At a time when artificial intelligence seems to be everywhere, understanding how and when to use it has become more important than ever.

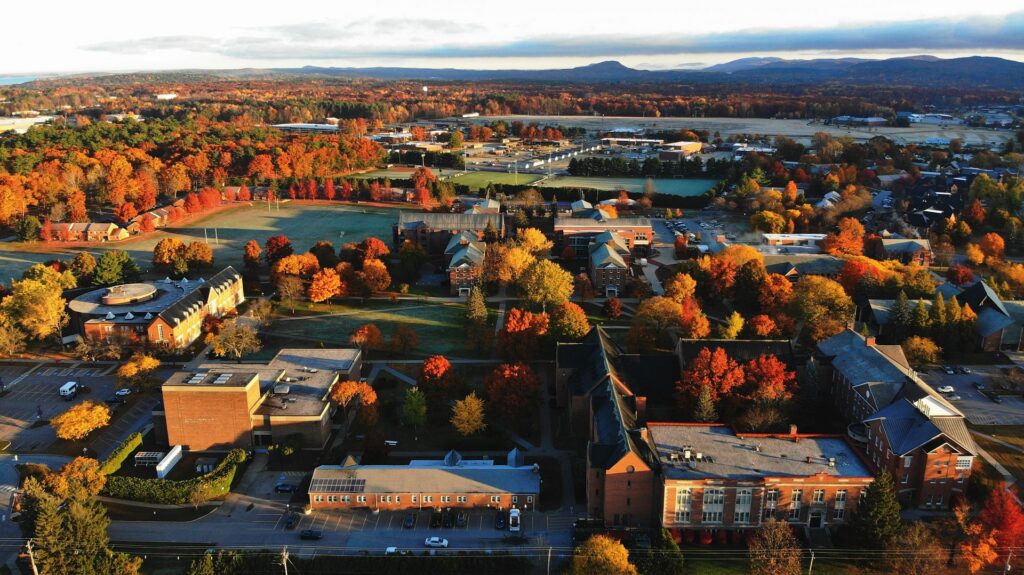

Saint Michael’s College took that mission to heart with a recent event on campus exploring this evolving digital tool.

First-year students spent the summer reading AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference, which Princeton University computer scientist Arvind Narayanan co-wrote with one of his Ph.D. students. Saint Michael’s community members had the chance to hear directly from Narayanan and ask him questions about his research and his thoughts about the future of AI.

AI Snake Oil was this year’s common text for the Class of 2029, giving students a shared basis for discussion in their First-Year Seminar classes.

Computer scientist Dr. Arvind Narayanan of Princeton University speaks at Saint Michael’s College on Sept. 11, 2025. (Photo by Sophie Burt ’26)

Distinguishing tools vs. snake oil

Narayanan’s book and talk aimed to peel back the curtain on one of today’s most common and confusing technologies. He and his co-author Sayash Kapoor argue that we commonly misunderstand the abilities that AI does and does not have – and this lack of understanding can be dangerous.

Saint Michael’s College Professor Kristin Dykstra, director of the First-Year Seminar Program, introduced Narayanan, reminding her audience why his work is crucial.

“We do need smart, important voices to keep coming regarding both the benefits and limitations of these tools that are appearing as arrival technologies,” Dykstra said.

Narayanan did not open his talk with Gemini, ChatGPT, self-driving cars, or Siri, but with something more commonly overlooked that poses a larger problem than people might realize: interview hiring software.

Around 2018, Narayanan began investigating companies that had introduced AI tools to help employers sift through job applicants. These tools required candidates to submit 30-second interview videos, which the software analyzed to produce a numerical “personality score” that could be used in the hiring process.

“As a computer scientist, it didn’t seem like there was any possible way this could work,” Narayanan said.

This suspicion proved to be correct when investigative journalists were able to access this AI technology and try it out for themselves. Narayanan said the journalists submitted videos that differed only slightly from one another, and each version received a different personality score.

Professor Kristin Dykstra, director of the First-Year Seminar Program at Saint Michael’s College, introduces Dr. Arvind Narayanan during an event on Sept. 11, 2025. (Photo by Sophie Burt ’26)

For example, Narayanan said one of the videos had “been digitally altered to add a bookshelf in the background. One of the other alterations they made is the use of glasses versus no glasses. Everything else in the video is the same.”

The results of these two videos were “massively different,” Narayanan explained. The AI technology was ultimately picking up on superficial stereotypes in its data.

From this understanding, Narayanan developed the “first big idea” of the book.

“We use the word AI to talk about too many things that have relatively little [in common], so we need to get more specific,” he said.

Exploring the different types of AI

Narayanan warned the audience that “predictive AI,” as seen in forms of technology like the interview software, is unreliable in high-stakes decisions. Another example of this unreliability he cited was from an investigation by ProPublica published in 2016. The publication revealed that criminal justice systems were using predictive AI software to determine a defendant’s likelihood of committing a future crime. The investigation proved that this technology was not only racially biased but also broadly inaccurate.

“It should be common sense that it’s hard to predict the future,” Narayanan said. “Because this is presented with a veneer of technological accuracy, we have somehow, as a society, accepted the idea of incarcerating people as a way to prevent future crime, as opposed to after a determination of guilt.”

On the other hand, Narayanan presented “generative AI” with a sense of optimism – though with the caveat that it is important to remain cautious of new technologies. Narayanan presented a lighthearted example of using generative AI to create a fun and engaging math game to help his six-year-old daughter practice fractions.

Dr. Arvind Narayanan of Princeton University speaks about the benefits and pitfalls of AI during an event on Sept. 11, 2025. (Photo by Sophie Burt ’26)

However, he tempered this optimism with warnings, noting that some sellers have used generative AI to falsely market and sell books and to produce misleading or low-quality content.

“Someone relies on that and doesn’t recognize that this is an unreliable tool that doesn’t really have a source of truth,” he said.

Narayanan said there have been instances where generative AI has caused immediate danger to individuals.

“There have been suicides where one important factor was the person being in poor mental health and developing a companionship with a chatbot, and the chatbot going off the rails and encouraging their suicide attempts,” Narayanan said.

Ethics and realities of using AI

Throughout his presentation, Narayanan continued to return to the important theme that AI, specifically generative AI, is neither morally good nor bad. He explained that the most crucial part is how this technology is used.

As a computer scientist, Narayanan said, he feels confident and comfortable asking AI to generate code, but only because he has years of experience and expertise in this field and can catch potential mistakes. He compared using AI shortcuts in the classroom and workplace to bringing a forklift into the gym.

“The point of lifting weights isn’t to move them from place A to place B,” he said. “It’s to build strength. Learning is the same way. Even if an AI tool can write your essay, you need the mental workout of writing it yourself.”

Physics Professor Alain Brizard, second from right, asks Dr. Arvind Narayanan a question about artificial intelligence during an event on Sept. 11, 2025. (Photo by Sophie Burt ’26)

Narayanan concluded his talk with a forward-looking note about AI in the workforce – something, he hoped, that would ease audience members’ minds. He said that while AI will continue to automate certain jobs and positions, it will also open opportunities for different work.

“When automatic teller machines (ATMs) were introduced, surprisingly, the employment of bank tellers actually went up for many, many decades,” Narayanan said. “When that happens, the nature of the job changes.”

This, Narayanan said, is why humans will still have jobs to do: “The job description changes, so that people focus on the things that cannot yet be automated, and on new things that they can now do.”

For all press inquiries, contact Elizabeth Murray, Associate Director of Communications at Saint Michael’s College.